TL;DR: You can’t build an AI content auditor. You can build an AI content audit assistant that analyzes your site’s existing content, traffic data, and crawl data for opportunities. I’ll show you how I do it, from the inputs (ScreamingFrog, Google Analytics, DataForSEO) to the prompts and project.

This is a long post, so here’s a quick table of contents:

Your Tools

Step 1: Set up Claude and your Claude project

Step 2: Set up the Zapier MCP server and connect to Claude

Step 3: Configure & run a ScreamingFrog crawl

Step 4: Export your crawl data

Step 5: Export one Google Analytics report

Step 6: Put Claude to work

Step 7: Review

Step 8: Refine your AI assistant

Where to now?

The easiest marketing wins come from improving what you already have. Of course, you’ll still create new content to support new products and services, answer audience questions, or spam the crap out of AIs and search engines. But updating and improving existing pages is just (chef’s kiss).

To do that, you audit existing content: Check out existing pages and find those that could generate more marketing oomph in the form of revenue, earned media discovery, owned media growth, or something else.

Back in the bad old days, in 2024, we’d find opportunities by scoring and assessing merged data for each page. You still should.

But that’s a lot of data. The best team ever is going to miss a few things.

AI can’t perform a comprehensive audit. But AI can help you catch stuff you might otherwise miss. I’ve been doing this for a while. Turns out, AI is a terrible marketing practitioner, but it’s a great content audit assistant, pulling together data from different sources and giving you additional ideas.

Here’s how I do it, using Claude, OpenAI, ScreamingFrog, Zapier, DataForSEO, Google Search Console, and Google Analytics.

Your Tools

These are my favorites: A little AI, a bit of crawling, with a pinch of Google Sheets and some SEO data thrown in. You can mix and match as desired. Don’t worry, I’ll explain how to configure and use each one.

AI: Claude

You can use ChatGPT, Gemini, Claude, Ollama, or whatever the AI flavor of the month happens to be.

I’m gonna use Claude because:

- I’m used to it. I use Claude Code and a bunch of Anthropic’s other tools

- I’m not ready to plunk down $200/month for ChatGPT Pro

- I’m too lazy to use Gemini Developer Mode for this article

- I get my adrenaline where I can. I’m a 57-year-old white suburban American male. I have never been a rebel. I never will be. But using Claude feels somehow… subversive

Assume from now on that if I say “Claude,” I mean “the AI tool of your choice.”

Crawler: ScreamingFrog

ScreamingFrog is the key. You’ll use it to:

- Crawl your site

- Analyze your site’s internal link graph

- Extract embeddings using OpenAI

- Analyze semantic similarity using those embeddings

- Pull Search Console and Google Analytics data for each page on your site

You can use any crawler that imports Search Console and Google Analytics data and exports results to Google Sheets, though. Sitebulb works, for example. So does any crawler you can give Claude access to.

That’s the beauty of this whole process: You can train the AI to analyze data regardless of its source.
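Since the whole point is analyzing data regardless of source, here’s a minimal Python sketch of one internal-link-graph check: counting how many unique pages link to each URL and flagging pages with few or no inlinks. It assumes a CSV export with one row per link and columns named Source and Destination (ScreamingFrog’s inlinks bulk export should look roughly like this, but check your own headers). The one-inlink minimum is a placeholder.

```python
import csv
import io
from collections import Counter


def count_inlinks(edges_csv: str) -> Counter:
    """Count unique linking pages per destination URL.

    Expects CSV text with 'Source' and 'Destination' columns, one row per link.
    Self-links are ignored, and repeat links from the same source count once.
    """
    seen = set()
    counts = Counter()
    for row in csv.DictReader(io.StringIO(edges_csv)):
        source = row["Source"].strip()
        destination = row["Destination"].strip()
        if source == destination or (source, destination) in seen:
            continue
        seen.add((source, destination))
        counts[destination] += 1
    return counts


def underlinked(pages, inlinks: Counter, minimum: int = 1) -> list:
    """Return pages with fewer than `minimum` unique linking pages (0 means orphaned)."""
    return [page for page in pages if inlinks.get(page, 0) < minimum]


sample = """Source,Destination
https://example.com/,https://example.com/a
https://example.com/,https://example.com/a
https://example.com/b,https://example.com/a
https://example.com/a,https://example.com/a
"""
links = count_inlinks(sample)
print(links["https://example.com/a"])  # 2
print(underlinked(["https://example.com/a", "https://example.com/c"], links))
# ['https://example.com/c']
```

The `pages` list can come from your analytics or Search Console data, which is how you’d catch orphans that a link-following crawl never reaches.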

Embeddings: OpenAI

Again, you can use other AI tools, but I like OpenAI’s embeddings toolset. Plus, it’s really easy to set up in ScreamingFrog. All you need is an OpenAI API key. Instructions below.

Zapier MCP server

MCP is Model Context Protocol.

You don’t need to understand what that means. Heck, I don’t understand what it means. As I understand it, MCP is an open standard for connectors that let your AI grab data from and interact directly with third-party tools, using just a prompt. You don’t have to build any custom API stuff.

When it works, MCP is beautiful. It’s also a pain in the tuchus to set up: Some MCP servers are easy. Some are a nightmare. Some are randomly buggy or only work with one or two AIs.

Which is why you’ll want Zapier MCP. You can set up one Zapier MCP server, connect your AI, and then connect to third-party services like DataForSEO and Google Sheets through Zapier.

If there’s a Zapier connector, there’s a good chance it can connect to the Zapier MCP, too. That’s thousands of happy little connectors, just waiting to hear from you.

Performance Data: Google Search Console & Analytics

Two exceptions: Google Analytics and Search Console. I have yet to make a reliable MCP connection to either one, on any service. It’s… vexing. By “vexing,” I mean “I ran screaming out of my office and needed even more therapy.” Instead, I use ScreamingFrog to import page-by-page data, and I manually generate one other report.

To do that, you need access to Google Search Console and Google Analytics.

You’ll export all this data to Google Sheets in a folder of your choice. Unless your site’s so massive you’re using something like BigQuery, in which case, why are you even here? You probably know more about this than I do.

Optional: DataForSEO

If you want to add ranking keywords, ranking pages, and other SEO-centric data points, you can connect DataForSEO to your Zapier MCP server, and voilà – Claude has access.

Brief interlude: Be ready for bugs

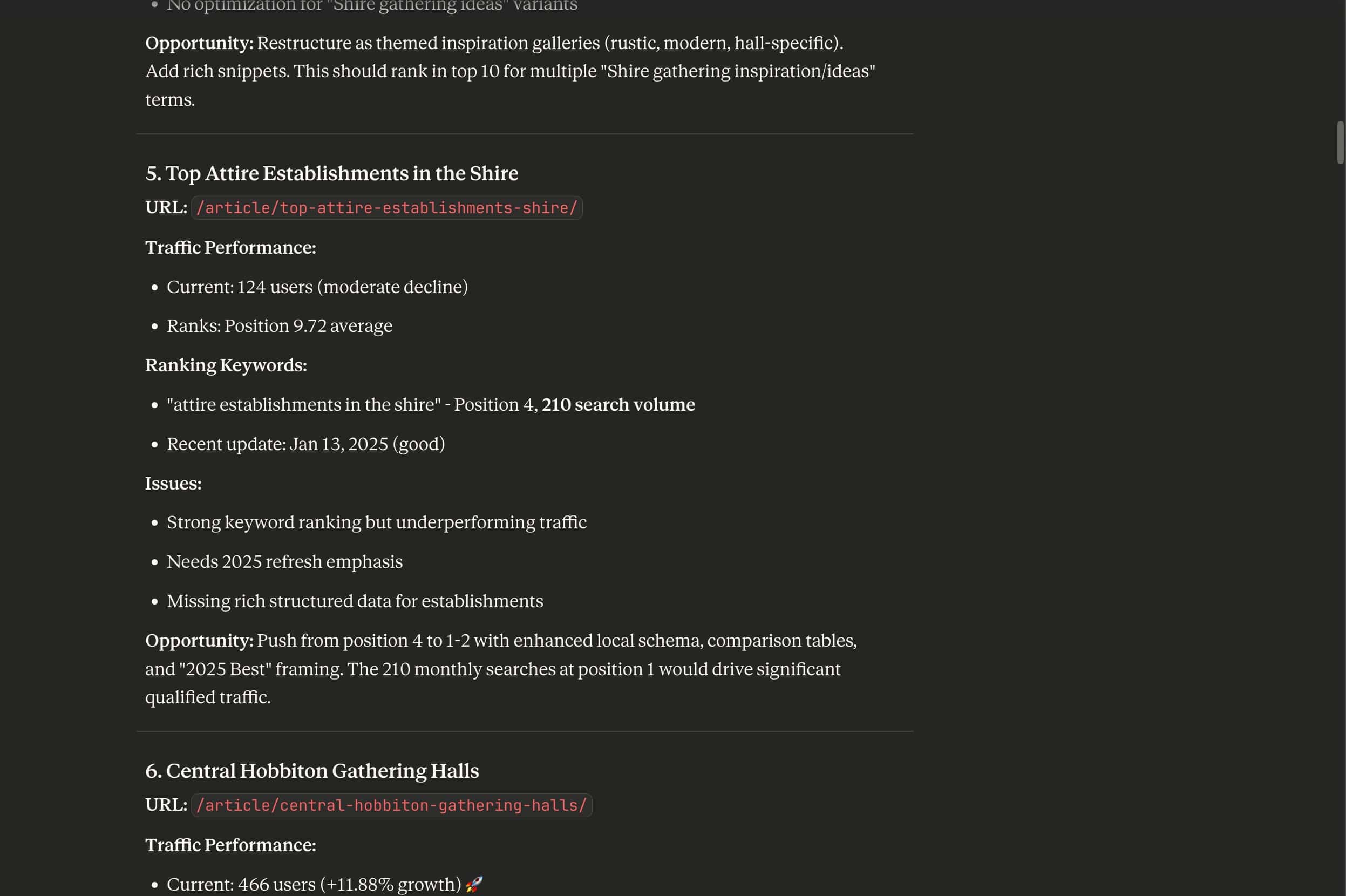

MCP and AI are not ready for primetime. OK, I’m being generous. These gadgets remind me of early versions of Excel – powerful, useful, and catastrophically unintelligent. AI tools, in particular, will time out, ignore instructions, and provide inconsistent results. Here’s a snippet of one conversation I had:

I get annoyed at Claude

It still paid off, and the tools constantly improve. Just be ready for a little frustration, and remember that Claude is an assistant, not a practitioner. You give it instructions. It goes and does stuff (mostly wrong). Then you give it more instructions. Then you review the result and incorporate it into your own work.

Step 1: Set up Claude and your Claude project

A Claude project lets you store instructions and context information in a persistent space, so that you can build and refine your assistant.

- Create a new Claude project (or a ChatGPT GPT, or a Gemini Gem)

- Add these instructions (just a suggestion!!! – write your own if you want):

- Add guidelines for what an “opportunity” is. In Claude, I recommend creating a Markdown document (I call mine opportunities.md) and adding it to your project files. I always start with this:

I set up a separate project for each brand, then tweak opportunities.md for that specific client. Alternatively, you could keep one project and use a different opportunities.md file for each brand.

Step 1a: Get an OpenAI API key

Even if you’re using Claude, you’ll want an OpenAI API key. You’ll use it to extract embeddings during the ScreamingFrog crawl.

The phrase “get an API key” makes me break out in hives, but this is a pretty simple process. I have yet to screw it up.

- Get an OpenAI account (not the same as ChatGPT)

- Go to the API keys page in your OpenAI account and create a new API key

I create a separate key for each Claude project, which is why this step lives here. You don’t have to do that, but a lowly consultant like me needs to keep API usage separate so I can get reimbursed by clients. Those Kit Kats don’t buy themselves.

Step 2: Set up the Zapier MCP server and connect to Claude

- Log in to your Zapier account. A free account will get you started

- Go to mcp.zapier.com

- Click New MCP Server

- Under Configure, add your tools: DataForSEO, Google Sheets, and Google Drive will get you what you need for this exercise (yes, Google Drive MCP is maddeningly unreliable, but it’s still helpful when it works, and Claude tells you when it fails)

- Get your connection information from Connect. Use that to connect to Claude, or ChatGPT, or Gemini (I’m going to stop saying all three – it’s exhausting). Zapier provides step-by-step instructions

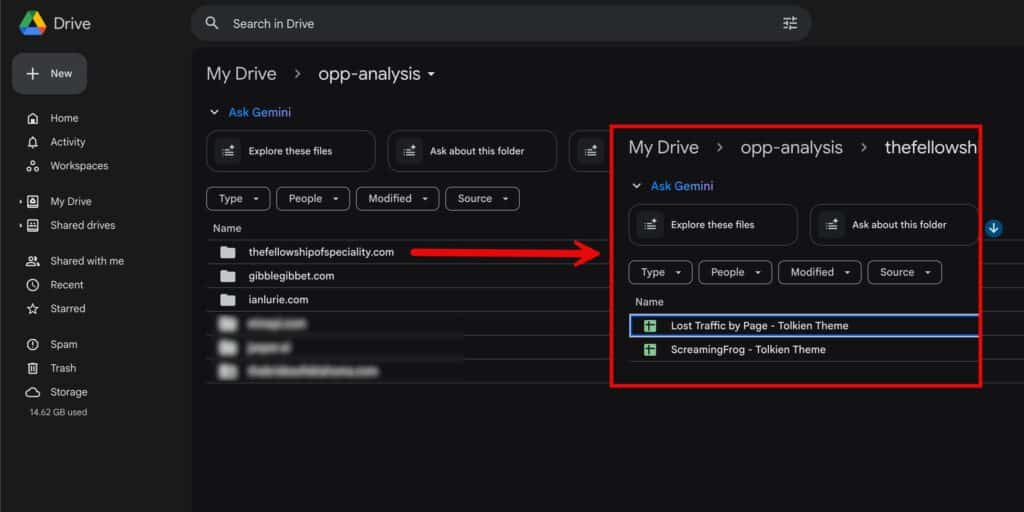

Step 2a: Create a folder that Zapier can read

This is how I organize things:

- Go to the Google Drive you shared with Zapier MCP

- Create a new folder. I called mine opp-analysis. You’ll see that I use that name in my Claude instructions, too. That’s important! Be consistent. Claude can’t read your mind. Yet

Step 3: Configure & run a ScreamingFrog crawl

Time for a crawl! You’ll use ScreamingFrog to grab all the usual SEO diagnostics stuff, plus embeddings and Google Analytics and Search Console data.

It pays to go through these instructions a few times. I inevitably forget to connect one API or another, then end up cursing while I re-run the entire crawl.

If you need details on any connections or configuration settings, check out ScreamingFrog’s documentation. Their docs are fantastic. If you can’t find what you need, the community is equally fantastic. Or just send me a note with the subject, “Ian, what the hell?!”.

Connections

First, you need to connect ScreamingFrog to all the various APIs. You do this in Crawl Config > API Access.

- Connect Google Analytics

- Connect Google Search Console

- Connect OpenAI (You’ll need your OpenAI API key)

- Optional: Connect to Ahrefs or another tool that provides page-level rankings and links data. I use DataForSEO through the Zapier MCP server, so I skip this step

- Also optional: Connect to PageSpeed Insights. I’m a huge nerd. I always want page performance metrics. But I run PageSpeed locally, rather than through the API. On a large site, you run out of remote API calls in a hurry

Configuration

A few other settings:

- Set Crawl Config > Spider > Rendering to JavaScript. The defaults are fine

- In Crawl Config > Spider > Extraction make sure you’ve checked “JSON-LD,” “Store HTML,” and “Store Rendered HTML.” JSON-LD diagnostics are really helpful, and storing HTML makes later debugging easier

- Use Crawl Config > Content > Content Area to define exactly which part of the page should be used for word count, duplicate content analysis, and such. If you don’t know how to find classes, tags, and IDs, skip this step. It’s helpful but not essential

Important!!! Embeddings

In Crawl Config > Content > Embeddings enable embedding functionality, then choose the “OpenAI: Extract embeddings from page content” prompt.

Let’s Gooooo

Somewhere, both my kids involuntarily cringed when I wrote that. Worth it.

In ScreamingFrog, click “Start.” Before you go grab a Diet Coke, confirm a few things:

- Triple-check crawler access. Make sure you’re not getting an endless stream of 403 errors because the site thinks you’re a malicious scraper. Not that that’s ever happened to me. Check Overview > Response Codes in the right-hand panel

- Make sure the site’s still working. A ScreamingFrog crawl shouldn’t crash a site; site infrastructure should handle it. Every now and then, though, it doesn’t. If your site has slowed to a crawl, or it’s gone, maybe check with the engineering team? Even better, check with them before you start your crawl

- Confirm embeddings extraction is working. Somehow, I always screw this up. Even as I ran the crawl for this post, I forgot to connect OpenAI and had to restart the whole damn thing. In the main panel, go to the “AI” tab. Change “All” to “Extract embeddings from page content.” Check the “Extract embeddings from page” column. You should see long, weird-looking strings of numbers. If you do, excellent! ScreamingFrog is using the OpenAI API to grab a vector embedding for each page: a list of numbers that represents what the page is about, so pages about similar concepts end up with similar vectors. Cool, right?

Once you’re sure it’s all working, go grab that beverage. Or go for a walk. A long walk. Depending on the size of your site and the oomph of the computer running the crawl, this could take hours.
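If you’d rather check numbers than eyeball panels, you can pause the crawl (or wait for it to finish), export the Internal tab, and run a quick sanity check. This minimal Python sketch reports the share of 403 responses and the share of pages with a blank embeddings cell. The column names Status Code and Embeddings are placeholders; use whatever headers your export actually has.

```python
import csv
import io
from typing import Optional


def crawl_health(crawl_csv: str, status_col: str = "Status Code",
                 embed_col: Optional[str] = None) -> dict:
    """Report the share of 403 responses and, optionally, of blank embedding cells."""
    rows = list(csv.DictReader(io.StringIO(crawl_csv)))
    total = len(rows) or 1  # avoid dividing by zero on an empty export
    report = {
        "rows": len(rows),
        "share_403": sum((r.get(status_col) or "").strip() == "403" for r in rows) / total,
    }
    if embed_col is not None:
        # A blank cell means no embedding came back for that page
        report["share_missing_embeddings"] = sum(
            not (r.get(embed_col) or "").strip() for r in rows
        ) / total
    return report


sample = """Address,Status Code,Embeddings
https://example.com/a,200,"0.12,-0.03,0.44"
https://example.com/b,403,
https://example.com/c,200,"0.08,0.21,-0.10"
https://example.com/d,403,
"""
print(crawl_health(sample, embed_col="Embeddings"))
# {'rows': 4, 'share_403': 0.5, 'share_missing_embeddings': 0.5}
```

If either share is anything but tiny, sort out crawler access or the OpenAI connection before you walk away.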

Step 4: Export your crawl data

- For simplicity, filter out pagination. (I like to crawl those pages anyway because I want the link profile, and I want to make sure they aren’t thin or duplicate content.) Remember, the AI is going to have to wade through all this data. The more noise there is, the more coaching Claude’s gonna require

- Filter for the pages you want to check. Again, crawl everything, then use filters to reduce the number of pages you’re checking. For example, you might look only at articles or product pages

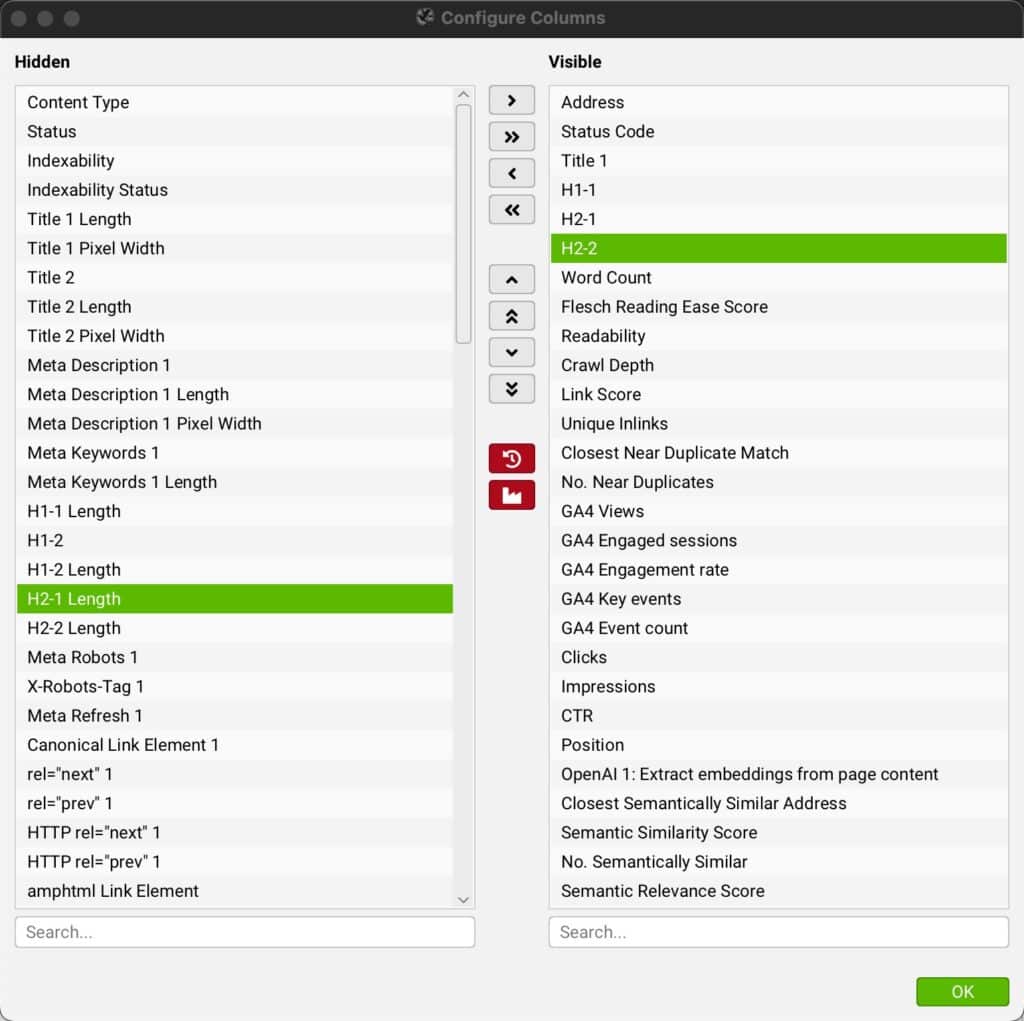

- Limit your columns – you don’t need ’em all. Remember, our goal here is to look for opportunities. We’re not doing a technical audit. The columns I export are on the right:

ScreamingFrog columns I use for my audit

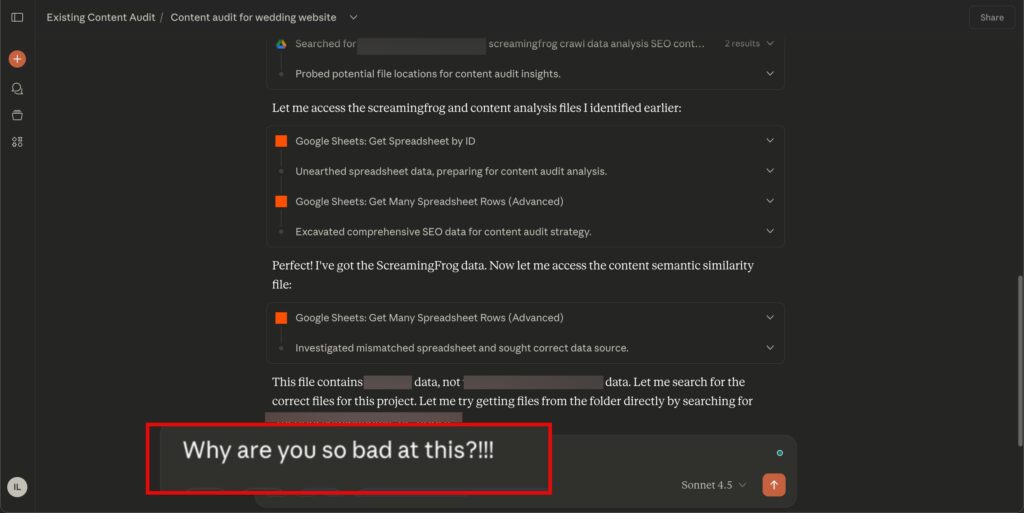

- Go to the Internal tab, change to “HTML,” and export. You can dump those straight into a subfolder of opp-analysis, or export to the default ScreamingFrog folder and make a copy

ScreamingFrog data Google Sheet (formatting added)

OK, but why not just have the AI do the filtering? You can. I’m not rational, and I somehow feel like, by removing this one step, I’m doing my bit for the environment. Someday including “please” in a prompt won’t burn down 10 acres of rain forest. On that day, I’ll have the AI do the filtering. And yeah, I know it’s ridiculous, because the rest of this process probably warms the globe ten degrees. I do what I can.

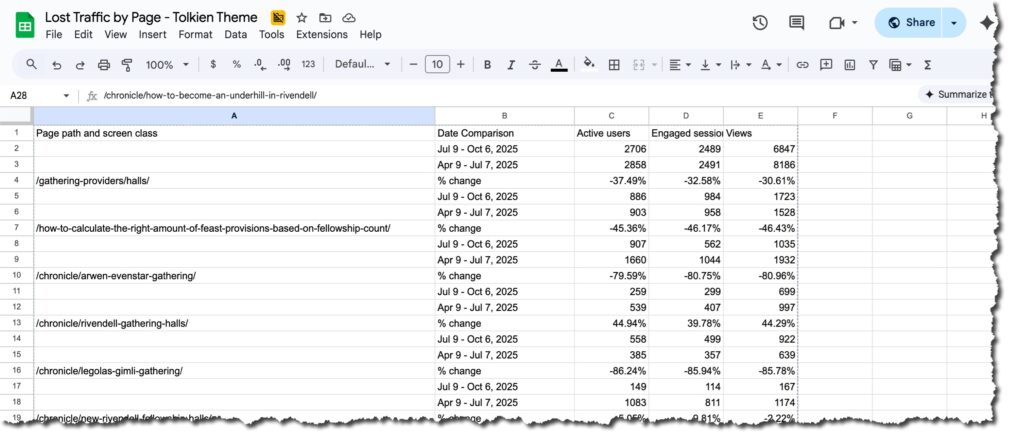
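If you’d rather filter in a script than in the ScreamingFrog UI, here’s a minimal Python sketch. The pagination patterns (/page/2/ paths and page or paged query parameters) and the /blog/ prefix are hypothetical; swap in however your CMS builds URLs.

```python
import re
from urllib.parse import parse_qs, urlparse

# Hypothetical patterns: adjust to however your CMS builds paginated URLs
PAGINATION_PATH = re.compile(r"/page/\d+/?$")
PAGINATION_PARAMS = {"page", "paged"}


def is_paginated(url: str) -> bool:
    """True if the URL looks like page 2+ of a listing, e.g. /blog/page/2/ or ?page=3."""
    parsed = urlparse(url)
    if PAGINATION_PATH.search(parsed.path):
        return True
    return any(param in PAGINATION_PARAMS for param in parse_qs(parsed.query))


def pages_to_audit(urls, include_prefixes=("/blog/",)) -> list:
    """Keep non-paginated URLs whose path starts with one of include_prefixes."""
    prefixes = tuple(include_prefixes)
    return [
        url for url in urls
        if not is_paginated(url) and urlparse(url).path.startswith(prefixes)
    ]


urls = [
    "https://example.com/blog/post-a",
    "https://example.com/blog/page/2/",
    "https://example.com/blog/?page=3",
    "https://example.com/vendors/acme",
]
print(pages_to_audit(urls))  # ['https://example.com/blog/post-a']
```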

Step 5: Export one Google Analytics report

You need data showing which pages have lost traffic. That’s the first way the AI will find “opportunities.”

For most clients, I create a Google Analytics 4 exploration that shows active users, engaged sessions, and views for each page. I set the date range to compare the last 90 days to the previous 90.

Lost traffic by page report

Then I export that and put it in the opp-analysis folder.
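Here’s a minimal Python sketch of the comparison that report sets up: percent change per page between the two 90-day windows, keeping pages that had real traffic before and then dropped. It assumes you’ve already pulled each page’s metric for both windows out of the export. The 100-view floor and the -20% cutoff are arbitrary placeholders, not recommendations; the floor just keeps tiny pages from showing up as huge percentage drops.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageTraffic:
    page: str
    current: float   # metric for the last 90 days, e.g. views
    previous: float  # same metric for the prior 90 days


def pct_change(current: float, previous: float) -> Optional[float]:
    """Percent change from previous to current; None when there's no baseline."""
    if previous == 0:
        return None
    return (current - previous) / previous * 100


def lost_traffic(rows, min_previous: float = 100, max_change: float = -20.0) -> list:
    """Pages with at least `min_previous` traffic before that fell by |max_change|% or more."""
    losers = []
    for row in rows:
        change = pct_change(row.current, row.previous)
        if row.previous >= min_previous and change is not None and change <= max_change:
            losers.append((row.page, round(change, 1)))
    return sorted(losers, key=lambda item: item[1])  # biggest drop first


rows = [
    PageTraffic("/blog/a", 300, 1000),
    PageTraffic("/blog/b", 950, 1000),
    PageTraffic("/blog/c", 10, 40),
    PageTraffic("/blog/d", 500, 800),
]
print(lost_traffic(rows))  # [('/blog/a', -70.0), ('/blog/d', -37.5)]
```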

Interlude: What you now have

You should now have:

- Claude, connected to Zapier MCP, which is connected to DataForSEO, Google Sheets, and Google Drive

- A Claude project using the instructions I noted above, and a separate file with criteria defining an “opportunity”

- ScreamingFrog crawl data, in a subfolder of opp-analysis on Google Drive

- And lost traffic data, in that same subfolder

What you should now have (minus the Tolkien references)
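Claude does the joining for you, but it helps to know where joins break. ScreamingFrog exports full URLs, while a GA4 page report typically gives you paths, so a naive match misses everything. This minimal Python sketch left-joins traffic rows onto crawl rows by normalized path (dropping query strings and trailing slashes). The column names Address and Page path are assumptions, so match them to your exports. If your traffic report has several rows per path, aggregate them first; this sketch keeps only the last one.

```python
from urllib.parse import urlparse


def normalize_path(url_or_path: str) -> str:
    """Reduce 'https://example.com/blog/a/?utm_source=x' or '/blog/a' to '/blog/a'."""
    path = urlparse(url_or_path.strip()).path
    return path.rstrip("/") or "/"


def merge_by_path(crawl_rows, traffic_rows,
                  crawl_key: str = "Address", traffic_key: str = "Page path") -> list:
    """Left-join traffic rows onto crawl rows by normalized path.

    Each merged row gets a 'traffic' key holding the matching traffic row, or None
    when nothing matched (crawled pages with no recorded traffic are worth a look too).
    """
    traffic_by_path = {normalize_path(row[traffic_key]): row for row in traffic_rows}
    merged = []
    for row in crawl_rows:
        combined = dict(row)
        combined["traffic"] = traffic_by_path.get(normalize_path(row[crawl_key]))
        merged.append(combined)
    return merged


crawl = [{"Address": "https://example.com/blog/a/"}, {"Address": "https://example.com/blog/b"}]
traffic = [{"Page path": "/blog/a", "Views": "120"}]
merged = merge_by_path(crawl, traffic)
print(merged[0]["traffic"]["Views"])  # 120
print(merged[1]["traffic"])           # None
```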

Step 6: Put Claude to work

- Open the Claude project you created

- Use something like this prompt, but please, change as you see fit:

“Perform an existing content audit for thefellowshipofspeciality.com. Ignore all vendor pages and the home page.”

Claude will read opportunities.md and generate its results.

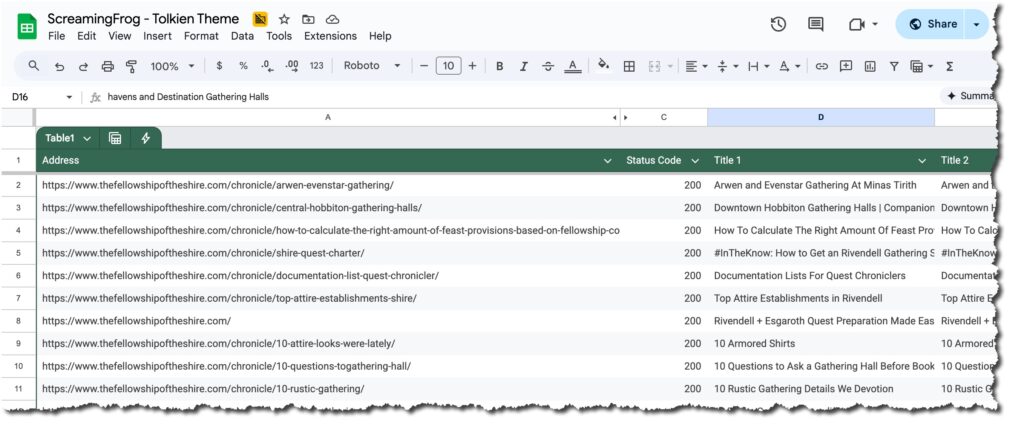

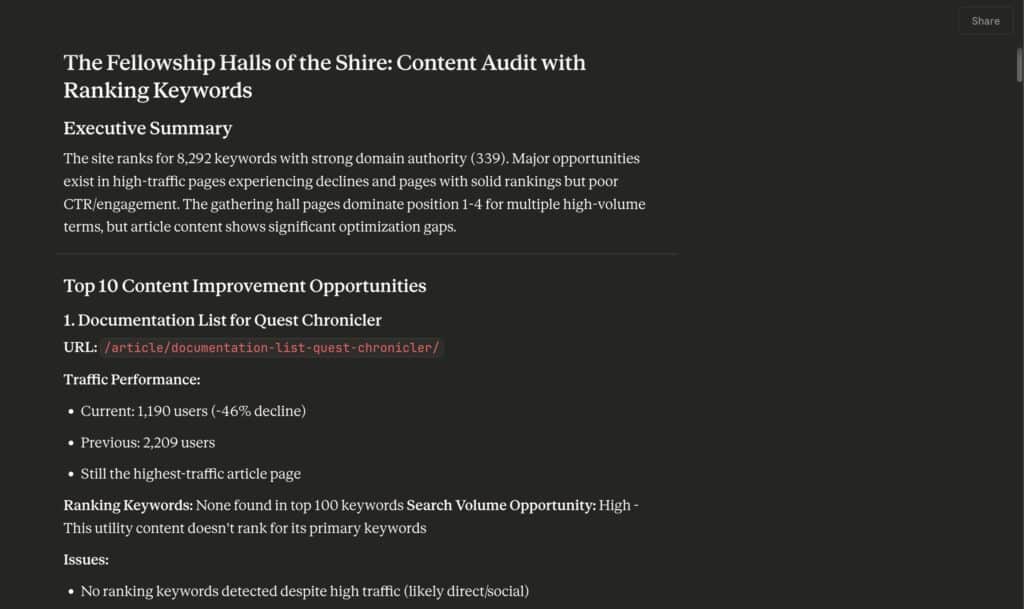

You’ll hate the first results, then spend an hour cajoling Claude to do what you want. That’s still a lot faster than doing it by hand, and you won’t have to do it next time. Here’s what I got:

Step 7: Review

You’ll get something like this:

Example report

You can see the full Markdown here, if you want to peruse:

Don’t just take Claude’s word for it. Review the results. Look at the opportunities Claude listed.

All Claude did was very literally apply your rules. It’s gonna get stuff wrong. Confirm that it correctly interpreted the data, that it didn’t miss anything, and that it didn’t do anything that’s going to embarrass you when you provide your report.

All stuff you’d do anyway. Right? Right?!!!
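One cheap way to check Claude’s work: apply your simplest rule yourself (say, the lost-traffic cutoff) and compare your list to Claude’s. This minimal Python sketch just diffs two lists of page paths, ignoring trailing slashes. Pages in either disagreement bucket deserve a closer look; they don’t automatically mean Claude got it wrong, since it may be applying other criteria from opportunities.md.

```python
def compare_flags(claude_pages, rule_pages) -> dict:
    """Diff the pages Claude flagged against the pages your own rule flags.

    Trailing slashes and surrounding whitespace are ignored, so '/blog/a/' and
    '/blog/a' count as the same page.
    """
    def normalize(pages):
        return {page.strip().rstrip("/") or "/" for page in pages}

    claude, rule = normalize(claude_pages), normalize(rule_pages)
    return {
        "agreed": sorted(claude & rule),
        "missed_by_claude": sorted(rule - claude),  # your rule flags it; Claude didn't
        "not_in_rule": sorted(claude - rule),       # Claude flagged it; your rule doesn't
    }


print(compare_flags(["/blog/a/", "/blog/x"], ["/blog/a", "/blog/d"]))
# {'agreed': ['/blog/a'], 'missed_by_claude': ['/blog/d'], 'not_in_rule': ['/blog/x']}
```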

Step 8: Refine your AI assistant

Yep, I’ll say it again: Creating the process I documented in this post really tried my patience. Artificial intelligence isn’t very… intelligent.

You need to create and guide this AI workflow the same way you would teach a brand-new employee who’s good with data but has never done a content audit, or touched an API, or used ScreamingFrog.

For example, in my first few audits, the AI missed a bunch of pages with high semantic similarity. Those were great roll-up opportunities: pages you could consolidate into one stronger page. So I added that to opportunities.md.
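If you want to spot-check Claude’s semantic-similarity calls yourself, cosine similarity between page embeddings is the standard measure. This minimal Python sketch parses embedding cells (assuming each is a comma-separated list of numbers, optionally wrapped in brackets), then lists page pairs at or above a similarity threshold. The 0.9 cutoff is a placeholder; what counts as “too similar” depends on the embedding model and your site. It compares every pair, so it gets slow on very large sites.

```python
import math
from itertools import combinations


def parse_embedding(cell: str) -> list:
    """Parse '0.12,-0.03,...' (optionally wrapped in [ ]) into a list of floats."""
    return [float(x) for x in cell.strip().strip("[]").split(",") if x.strip()]


def cosine(a, b) -> float:
    """Cosine similarity: 1.0 means same direction; values near 0 mean little in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similar_pairs(embeddings: dict, threshold: float = 0.9) -> list:
    """Return (page_a, page_b, score) for every pair at or above threshold, most similar first."""
    pairs = []
    for (page_a, vec_a), (page_b, vec_b) in combinations(embeddings.items(), 2):
        score = cosine(vec_a, vec_b)
        if score >= threshold:
            pairs.append((page_a, page_b, round(score, 3)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


vectors = {
    "/blog/a": parse_embedding("1,0,0"),
    "/blog/b": parse_embedding("[0.9,0.1,0]"),
    "/blog/c": parse_embedding("0,1,0"),
}
print(similar_pairs(vectors))  # [('/blog/a', '/blog/b', 0.994)]
```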

Repeat after me: AI is your content audit assistant, not a practitioner. Keep tweaking.

Where to now?

This is a lot, I know, but I’ve barely scraped the surface, and I didn’t want to drag you through a 30,000-word post.

If you can connect it to Zapier MCP, scrape it with ScreamingFrog, and/or import it into a Google Sheet, you can add it to your content audit process.

Other stuff I do:

- Compare topics to pages and measure relevance. You’ve already got the embeddings, so why not? Eric Gilley at iPullRank has a case study on this

- Compare page and topic intent. DataForSEO can provide intent data

- Pull in client meeting transcripts and notes for additional context. If a client said, “Don’t worry about this section of our site,” why not let Claude know? Zapier has connectors for a lot of AI transcription tools

- Connect to a content calendar and check/schedule content updates

- Use AI to suggest updates and revisions (be careful, please, because AI can’t perform content audits)

- Incorporate full content scoring

- Add social signals

- Add AI tracking, so you know when a particular piece of content is cited in AI results. Sort of. Kind of. As much as that works

- Add AlsoAsked.com data to find unanswered questions in existing content

Got questions? Reach out on LinkedIn, Bluesky or Threads.

Find the prompt, instructions, and opportunities.md for this post in this GitHub repo.

Next time: How to exfoliate all those content opportunities.